This event showed up on OccultWatcher (OW) just a few days prior, but it was quite intriguing: this very bright event (perhaps the brightest of the year) had its centerline through Santa Cruz. But the asteroid was tiny, and the uncertainty limits meant there was only a 31% chance that Kirk and I would get the occultation. After too many recent misses, I thought it was time the odds changed in our favor. Kirk was on board too. Karl was not able to try it due to local rain damage.

This would be quick: only 0.7 seconds long. I planned to use 1x (1/60 s) and reduce it in field mode.
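For scale, here is a quick count of how many samples an event this short spans. It assumes NTSC timing (59.94 fields/s, 29.97 frames/s) for the Watec at the 1x setting; the helper name is just for illustration.

```python
# Rough sample count for a short occultation, assuming NTSC video timing.
FIELD_RATE_HZ = 60000 / 1001       # ~59.94 fields per second (NTSC, assumed)
FRAME_RATE_HZ = FIELD_RATE_HZ / 2  # two interlaced fields per frame

def samples_in_event(duration_s: float, rate_hz: float) -> float:
    """Number of video samples spanned by an event of the given duration."""
    return duration_s * rate_hz

predicted = 0.7  # predicted central duration, seconds
print(f"fields: {samples_in_event(predicted, FIELD_RATE_HZ):.1f}")  # fields: 42.0
print(f"frames: {samples_in_event(predicted, FRAME_RATE_HZ):.1f}")  # frames: 21.0
```

Analyzing by field roughly doubles the number of points across the event (about 42 versus 21), which is why field mode is attractive for a bright, short event like this.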


We've had a winter of very uniformly heavy rain, but Jan 1 was the one clear day, and luck was with us. We had clear skies at the right time, and I found a spot near my driveway entrance where I calculated I could see the star and also be shielded from the intense security lights nearby. My only error was that I had not used my alarm clock since the change to standard time, and I absent-mindedly didn't notice when I set it. After getting entirely set up with only 15 minutes to go, I looked at my watch and realized... I was an hour early. Yikes. There was no way I could go back to sleep and then get up again, as all my gear was open to any passerby with larcenous intent. So instead, I used the hour to try to test the NightEagle 2. But I forgot to take the f/3.3 reducer out of the optical chain, so it had both the 0.5x reducer and the 0.33x reducer in series and therefore could not reach focus.

The time arrived, and I got a clean, sharp, successful 0.6 second event on tape. Kirk likewise got a 0.7 second event.

But then the adventure began. My initial reduction in PyMovie showed a sharp upward spike in the field just after the D (disappearance). I sent a query to the IOTA board asking whether this could be a Fresnel diffraction spike, since it clearly couldn't be due to duplicity, nor to a graze, given that the duration was so close to the predicted central duration. Kirk, too, saw a spike, more prominently at his R (reappearance). I had a small spike at the R, which I wrote off as normal noise. However, diffraction was ruled out for the D spike, and then the detailed looking began, by me and by Kirk, bringing in Tony George. Tony initially believed the software was not at fault and felt I must have done something wrong in my analysis. But no: I showed that the video itself had no spike; the spike existed only in the output photometry.


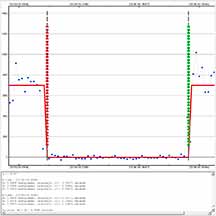

The CSV output files from PyMovie (viewed in Excel) for the two different PyMovie analysis runs. In the first run I did not manually change PyMovie's answer to the question of which field was first in time, but left it at its default of "tff"; I called that CSV file PyM. In the second run I changed it to the proper "bff" and called that CSV file PyM2. The take-away is that, in the first analysis only, a spurious spike appears at the bottom of the D, caused by the field order being improperly reversed. I'd thought this meant my PyM2 analysis would be correct. But a later, deeper look by Kirk Bender showed that, regardless of how that question is answered, PyMovie in field mode randomly assigns the photometry to the correct or the incorrect field. Stepping through the video itself field by field shows no spurious spike at the D. So the video is fine; it's the CSV output of the analysis that has the errors.

This shows field measurements near the beginning of the tape, away from the D and R, and shows how the field order between the two PyMovie runs was randomly reversed. The left column is the frame number, and there are two rows for each frame, one per field. The photometric values (middle columns) are identical or nearly identical for the corresponding field in both runs, as should be obvious from the values, but those values are put into the wrong field about half the time, in a random way.
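To make the tff/bff question concrete, here is a minimal sketch of how per-frame measurements of the top (even-row) and bottom (odd-row) fields get laid out as a time-ordered field series. The function and the (top, bottom) pair layout are hypothetical, not PyMovie's code. The point is that a wrong field-order flag swaps every pair consistently (giving a spike at the D like the one in my first run), whereas the fault found here swaps pairs at random.

```python
from typing import List, Tuple

def fields_in_time_order(frames: List[Tuple[float, float]], order: str) -> List[float]:
    """Flatten (top_field, bottom_field) pairs into a time-ordered field series.

    order="tff": the top field was exposed first; order="bff": the bottom field first.
    """
    if order not in ("tff", "bff"):
        raise ValueError("order must be 'tff' or 'bff'")
    series: List[float] = []
    for top, bottom in frames:
        series.extend((top, bottom) if order == "tff" else (bottom, top))
    return series

# Illustrative values: the star fades during the second frame, and the bottom field
# really is first in time (bff).
frames = [(100.0, 100.0), (20.0, 60.0), (5.0, 5.0)]
print(fields_in_time_order(frames, "bff"))  # [100.0, 100.0, 60.0, 20.0, 5.0, 5.0]
print(fields_in_time_order(frames, "tff"))  # [100.0, 100.0, 20.0, 60.0, 5.0, 5.0]
```

With the correct flag the D declines smoothly; with the wrong one, a spurious upward step appears right after the first faded field.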

I showed output to prove this, and Kirk did as well. My next theory was that I had not told PyMovie the proper field order in time. It defaulted to "top field first in time" ("tff"), but it was obvious that the bottom field was first in time ("bff"). When I manually told PyMovie it was "bff", the D spike disappeared, but my R spike remained. Kirk did more exhaustive testing, and the conclusion was this: with the Watec 910HX at the 1/60 s setting and PyMovie in field mode, with apertures moving between even and odd scan lines as the objects' centroids moved, the light curve would "sawtooth", because the photometric data were randomly assigned to the correct or the wrong field within the proper frame, depending on exactly how the aperture landed on the scan lines. The only way to avoid this mis-analysis in field mode was to use a static aperture that would not move as the star moved, which is not good for any length of time given telescope drift and seeing jitter. Alternatively, since the fields are still listed in the .csv file in the correct time order, one could get proper data by analyzing in frame mode rather than field mode, so that the fields are combined (at lower time resolution). This, in fact, is how I analyze nearly all occultations, which usually involve much fainter stars. But this one was so short and so bright that the 1/60 s (field interval) setting, analyzed in field mode, was naturally the best way to get the best time resolution.

As of Jan 14, we are waiting for PyMovie to be repaired and re-issued before using it to analyze 1/60 s events in field mode. Kirk frequently analyzes in field mode, however, even for fainter objects, but he also block-integrates in PyOTE, as is proper. If there is integration involved, and the integration happens in PyOTE, then it should still be OK. But for bright objects, we'll have to wait for a corrected version of PyMovie.
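Why frame mode sidesteps the problem: a swap within a frame only reorders that frame's two field values, and their sum is unchanged. This minimal sketch assumes the field series comes in consecutive pairs belonging to the same frame (as the CSV rows do); the function name and values are illustrative.

```python
from typing import List

def combine_fields_to_frames(field_series: List[float]) -> List[float]:
    """Sum consecutive field pairs into frame values (frame-mode photometry)."""
    if len(field_series) % 2:
        raise ValueError("need an even number of fields")
    return [field_series[i] + field_series[i + 1] for i in range(0, len(field_series), 2)]

good = [100.0, 100.0, 60.0, 20.0, 5.0, 5.0]
scrambled = [100.0, 100.0, 20.0, 60.0, 5.0, 5.0]  # second frame's fields swapped
print(combine_fields_to_frames(good))       # [200.0, 80.0, 10.0]
print(combine_fields_to_frames(scrambled))  # [200.0, 80.0, 10.0]
```

The price is that six field samples become three frame samples, which is exactly the loss of time resolution that made field mode preferable for this event.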

The solution for now: Kirk has confirmed that LiMovie does not suffer from this malady and will also output in field mode, so it was the clearer choice. I will also make this point: for a constant-brightness star, it will be hard to tell from the light curve alone whether there is wrong field assignment like this. The reason is that the fields, per Kirk's analysis, are randomly correct or incorrect, and an artificial sawtooth appears only for the incorrect ones. But random wrong field assignment, as was clear in my own detailed image (posted below), can be mimicked by random seeing and photometric noise as well. So instead, the way to tell is this: during a clear disappearance or reappearance, the sawtoothing becomes obvious and unphysical. This is indeed what our light curves show. Merely looking at a constant star will not convincingly show wrong field order; you'd have to look at the video field by field and compare it to the photometric value in each field. When I do this, I see errors in the PyMovie version, especially at the D and R, where they are most obvious. Kirk finds the same. See the table above.
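The argument above can be checked with a small simulation under assumed numbers (3% noise on the unocculted star and a fade over a few fields; both hypothetical, not measured from our tapes). Randomly swapping the two fields within frames only reorders the values of a steady star, so order-independent statistics cannot see it. The same swaps inside a monotonic D produce direction reversals. The `reversals` helper is a simple sawtooth score of my own choosing, not a PyOTE or PyMovie feature.

```python
import random
from typing import List

def swap_pairs(series: List[float], swap_frames: List[int]) -> List[float]:
    """Swap the two field values of each listed frame (frame k holds fields 2k and 2k+1)."""
    out = list(series)
    for k in swap_frames:
        out[2 * k], out[2 * k + 1] = out[2 * k + 1], out[2 * k]
    return out

def reversals(series: List[float]) -> int:
    """Sawtooth score: count direction changes between consecutive non-flat steps."""
    diffs = [b - a for a, b in zip(series, series[1:]) if b != a]
    return sum(1 for d0, d1 in zip(diffs, diffs[1:]) if (d0 > 0) != (d1 > 0))

# 1) Unocculted star with 3% noise (assumed): random swaps only reorder the values,
#    so the scatter is unchanged and the swaps hide among the noise.
rng = random.Random(1)
steady = [100.0 + rng.gauss(0.0, 3.0) for _ in range(40)]
scrambled = swap_pairs(steady, [k for k in range(20) if rng.random() < 0.5])
print(sorted(steady) == sorted(scrambled))  # True: same values, different order

# 2) A monotonic D: swapping the fields in two frames creates an unphysical sawtooth.
fade = [100.0, 100.0, 100.0, 80.0, 50.0, 20.0, 5.0, 5.0]
print(reversals(fade), reversals(swap_pairs(fade, [1, 2])))  # 0 4
```

On the noisy steady star, the reversal count is high with or without swaps, which is why a look at the unocculted star alone does not settle the question.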

PyMovie + PyOTE Analysis

First, I'll show the PyMovie reduction. I did two PyMovie analyses and one LiMovie analysis. The first table of images is from the second PyMovie analysis, which began by manually specifying the proper field order and seemed to give a smoother D, but not R. It was Kirk's experiments that showed the field order pathology happens even after manually specifying the proper field order, when using the 1/60 s setting and analyzing in field mode. The only reasonable PyMovie workaround is to analyze in frame mode, but that would reduce the timing resolution, so it is not ideal here. LiMovie gave the best resolution.


My PyMovie screen capture. Note the saturated pixels.

The occultation in PyMovie. Note the sawtoothing at the R. I'd thought it might be normal photometric noise, but the LiMovie curve below is much smoother.

PyOTE solution. The duration turns out nearly identical in the PyMovie and LiMovie analyses: 0.6139 s here vs. 0.6198 s using LiMovie.

LiMovie + PyOTE Analysis

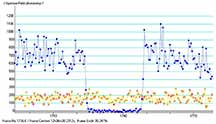

Kirk Bender did extensive testing and experimenting in PyMovie and showed that this field order pathology still exists even when the proper field order is specified manually. He then experimented with LiMovie, which does a good job and can also do the analysis in field mode. Until PyMovie is fixed, LiMovie seems the best solution for events recorded at 1/60 s and analyzed in field mode. My new light curve looks excellent: the sawtoothing seen in the PyMovie analysis, which I had thought was within the errors and might not be a sign of trouble, disappears, and I get a very smooth disappearance and reappearance. The CSV file generated by LiMovie can be analyzed in PyOTE without changes.


LiMovie screen capture, showing the settings I chose.

The light curve. I did not set a "sky" aperture, as it seemed unnecessary.

The raw LiMovie photometry light curve for the tracking star (yellow) and the target star (blue).

Then the .csv file was run through PyOTE. No integration was set; there were no clouds, so there was no need to use a reference star or "appsum". Zero false positive.

The PyOTE solution: duration 0.6198 seconds, with high accuracy. Kirk Bender was only ~1.5 km away or thereabouts, but his duration was over 0.7 seconds.


Same, zoomed in.

The D, close up. The descent in values testifies to proper field order and to the possibility of very good time resolution. However, I am concerned that PyOTE did not place the time of D at "mid-disappearance", as would be correct if, as seems to be the case, the fading is due to stellar diameter.

Similarly, at the R, where the rise is also smooth and physically just what you'd expect, PyOTE assigns the time not at the middle and not at the first point of the rise, but closer to the final full brightness. Again, I am puzzled why PyOTE would act this way.

To emphasize for others: there are two distinct issues that this unique occultation's data has brought up.

Issue #1. PyMovie in field mode assigns the photometry to the wrong field roughly half the time. This is not a photometric effect, because a field-by-field look at the video shows proper brightening and fading, without the spikes seen in the PyMovie CSV file and light curve; apparently something in the code is doing this when it should not. I want to stress that this sawtoothing would only be unambiguously visible at the D and R moments. Why? Because the randomness of the error would be hard to differentiate from the randomness of seeing or other point-to-point effects. I do not trust that a look at the unocculted star would show this effect distinct from photometric noise. The randomness arises because, from data point to data point, the centers of the apertures, as they track the seeing- and drift-affected centroids, land on even or odd scan lines at random, and the current PyMovie therefore assigns the measurements to random fields. Kirk Bender finds that it is this effect that determines which field is assigned a given photometry measurement. But the photometry should not be assigned according to which field happens to have the star's centroid on an even or odd line, since the star in fact spills over many scan lines. This is especially true for this event, when the star was deliberately defocused to minimize saturation. Saturation, by the way, is still evident during the reduction, as seen by the red pixels in PyMovie. The PyMovie field order issue is now being investigated by Tony and Bob.
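For contrast, here is a minimal sketch of field-mode aperture photometry that does not depend on where the centroid lands: split the aperture's pixels by row parity, sum each field separately, and let the camera's field order alone decide which sum is earlier. The function, the toy frame, and the assumptions (row 0 belongs to the top field, circular aperture, no sky subtraction) are illustrative only; this is not PyMovie's or LiMovie's actual code.

```python
from typing import List, Tuple

def field_photometry(frame: List[List[float]], cx: float, cy: float, radius: float,
                     order: str = "bff") -> Tuple[float, float]:
    """Return (earlier_field_sum, later_field_sum) inside a circular aperture.

    Assumes rows 0, 2, 4, ... form the top field and rows 1, 3, 5, ... the bottom field.
    """
    top = bottom = 0.0
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            if (r - cy) ** 2 + (c - cx) ** 2 <= radius ** 2:
                if r % 2 == 0:
                    top += value
                else:
                    bottom += value
    # Which field came first is fixed by the camera, not by where the centroid sits.
    return (bottom, top) if order == "bff" else (top, bottom)

# Toy 20x20 frame: the star faded between fields, so its even (top-field) rows are dimmer.
frame = [[0.0] * 20 for _ in range(20)]
for r in range(8, 13):
    for c in range(8, 13):
        frame[r][c] = 2.0 if r % 2 == 0 else 10.0

for cy in (10.0, 10.4, 11.0):  # centroid on an even row, between rows, on an odd row
    print(field_photometry(frame, cx=10.0, cy=cy, radius=4.0))  # (100.0, 30.0) each time
```

Because the defocused star spans several scan lines, both fields contribute to every measurement, and moving the aperture centroid between even and odd lines leaves the field assignment unchanged.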

Issue #2. The PyOTE-determined D and R times on properly field-ordered data (i.e. good data) differ from what the physics says they should be. No diffraction effect is evident in this light curve data; instead, it looks more like a finite stellar diameter large enough to take roughly 1/25 second to complete the occultation, giving 2 or 3 fields to complete the D and again for the R. If there were just a single intermediate photometric point, it would most likely be because the actual instantaneous event happened partway through a field or frame, as would typically be expected. But there appears to be more than one intermediate point in this event, suggesting that stellar diameter is the cause. If so, then the proper time of D and R is the mid-point of the fading and the mid-point of the brightening. I would expect PyOTE to set its red and green determinations at those points, but that is not what we see here. I've not yet heard a plausible answer.
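If the fade really is set by the stellar diameter, the geometric D and R correspond to the half-light points. A simple way to estimate that time from field-sampled data is to find where the curve crosses the level midway between the unocculted baseline and the occulted floor, interpolating linearly between samples. This is a stand-in for illustration only, not a description of how PyOTE computes its edge times; the baseline, floor, sample values, and function name are all assumptions.

```python
from typing import List, Optional

def half_level_time(t: List[float], flux: List[float],
                    baseline: float, floor: float) -> Optional[float]:
    """Time where flux first crosses (baseline + floor) / 2, by linear interpolation.

    Works for either a D (falling) or an R (rising) segment; returns None if no crossing.
    """
    half = 0.5 * (baseline + floor)
    for (t0, f0), (t1, f1) in zip(zip(t, flux), zip(t[1:], flux[1:])):
        if (f0 - half) * (f1 - half) <= 0 and f0 != f1:
            return t0 + (half - f0) * (t1 - t0) / (f1 - f0)
    return None

# Illustrative D sampled once per field (~1/59.94 s), fading over about 3 fields.
dt = 1001 / 60000
t = [i * dt for i in range(8)]
flux = [100.0, 100.0, 100.0, 80.0, 50.0, 20.0, 5.0, 5.0]
t_half = half_level_time(t, flux, baseline=100.0, floor=5.0)
print(f"{t_half / dt:.2f} fields after the first sample")  # 3.92 fields after the first sample
```

Here the half-light time lands in the middle of the fade, not at its bottom, which is where I would expect the D to be placed if stellar diameter is responsible for the gradual decline.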

LiMovie log file for Nolthenius. I observed from my driveway.

PyMovie + PyOTE Analysis

First, Kirk's analysis in PyMovie, in which PyMovie placed the photometric value in the wrong field about half the time, at random, depending on whether the aperture, as it re-centered, was positioned on an even or an odd scan line. The evidence is the sawtoothing at D and R, where the field values are plotted in the wrong order within the frame. The star is bright enough that the values (after perhaps a first brighter-than-unocculted spike due to diffraction, which was not seen) should decline uniformly to the minimum, show random noise about that value, and then rise uniformly for the R.


LiMovie + PyOTE Analysis

Here is Kirk's analysis using LiMovie, then PyOTE. Note that the spurious sawtoothing at D and R seen in the PyMovie analysis is gone. There is a second and unrelated issue, however, which interests me. For both Kirk and me, the star needs two descending points to get through the D, and likewise for the R. An instantaneous D and R should have at most one intermediate value. If the purpose is to place the D and R at the moment the geometric center of the star is in line with the asteroid limb, then for both Kirk and me PyOTE seems to assign this too late, only at the very bottom of the D. I suspect the R shows some diffraction spike, but it's not high enough above the later points for me to be confident of that.
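The "at most one intermediate value" reasoning can be checked by averaging a model light curve over each field exposure. This sketch assumes contiguous ~1/59.94 s field exposures with no dead time and a linear fade centered on the event time (a simplification of a uniform stellar disk); the 1/25 s fade is the rough estimate from Issue #2, and the function names and 2% threshold are hypothetical.

```python
from typing import List

FIELD_S = 1001 / 60000  # NTSC field duration, ~1/59.94 s (assumed)

def model_flux(t: float, t_d: float, fade_s: float) -> float:
    """Normalized flux: 1 before the fade, 0 after, linear in between (centered on t_d)."""
    if fade_s == 0:
        return 1.0 if t < t_d else 0.0
    x = (t - (t_d - fade_s / 2)) / fade_s
    return min(1.0, max(0.0, 1.0 - x))

def field_averages(t_d: float, fade_s: float, n_fields: int = 12, sub: int = 200) -> List[float]:
    """Average the model flux over each field exposure (midpoint-rule integration)."""
    out = []
    for k in range(n_fields):
        ts = [(k + (j + 0.5) / sub) * FIELD_S for j in range(sub)]
        out.append(sum(model_flux(t, t_d, fade_s) for t in ts) / sub)
    return out

def intermediate_count(values: List[float], tol: float = 0.02) -> int:
    """Number of samples that are neither near full light nor near zero."""
    return sum(1 for v in values if tol < v < 1 - tol)

t_d = 5.3 * FIELD_S  # event time falling partway through field 5
print(intermediate_count(field_averages(t_d, 0.0)))     # 1: instantaneous step
print(intermediate_count(field_averages(t_d, 1 / 25)))  # 3: ~1/25 s fade
```

Under these assumptions, an instantaneous event leaves only the field it falls in at an intermediate level, while a 1/25 s fade spreads across three fields, consistent with what both of our LiMovie light curves show.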


PyOTE log file from Bender, for the LiMovie + PyOTE analysis. Kirk observed from his driveway, about a km north of my track.